Debian is moving its git hosting from alioth.debian.org, an instance of FusionForge, to salsa.debian.org, which is a GitLab instance.

There is some background reading available at https://wiki.debian.org/Salsa/. It also has pointers to an import script that eases migration for people moving repositories. If you have a persistent IRC connection, it's definitely worth hanging out in #alioth on OFTC, too, to learn more about Salsa / GitLab.

As of now() salsa has 15,320 projects, 2,655 users in 298 groups.

Alioth has 29,590 git repositories (each roughly equivalent to a project in GitLab) and 30,498 users in 1,154 projects (each roughly equivalent to a group in GitLab).

So we currently have roughly 50% of the git repositories migrated, one month after leaving beta. This is very impressive.

As Alioth has naturally accumulated some cruft, Alexander Wirt (formorer) estimates that 80% of the repositories in use have already been migrated.

So it's time to update your local .git/config URLs!

Mehdi Dogguy has written nice scripts to ease handling Salsa / GitLab via the (extensive and very well documented) API. Among them is list_projects, which gets you a nice overview of the projects in a specific group. This is especially useful for the "Debian" group, which contains the former collab-maint repositories, i.e. source code that can and shall be maintained by Debian Developers collectively.

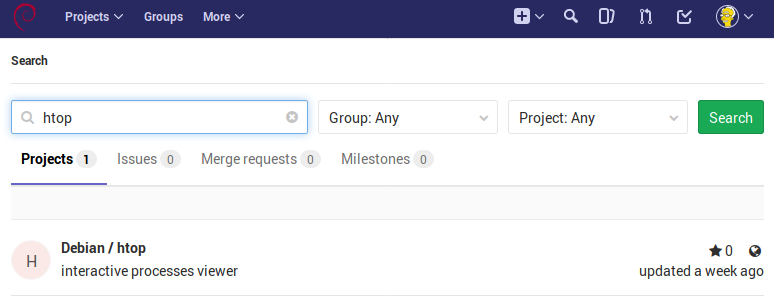

Finding migrated repositories

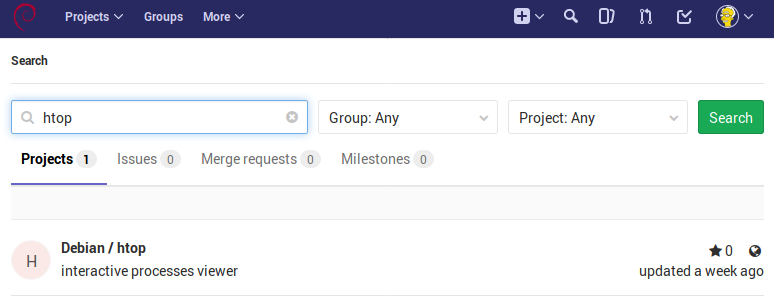

Salsa can search quite quickly via the Web UI: https://salsa.debian.org/search?utf8=✓&search=htop

but finding the URL to clone the repository from is more clicks and ~4MB of data each time (yeah, the modern web), so

$ curl --silent 'https://salsa.debian.org/api/v4/projects?search=htop' | jq .

[
  {
    "id": 9546,
    "description": "interactive processes viewer",
    "name": "htop",
    "name_with_namespace": "Debian / htop",
    "path": "htop",
    "path_with_namespace": "debian/htop",
    "created_at": "2018-02-05T12:44:35.017Z",
    "default_branch": "master",
    "tag_list": [],
    "ssh_url_to_repo": "git@salsa.debian.org:debian/htop.git",
    "http_url_to_repo": "https://salsa.debian.org/debian/htop.git",
    "web_url": "https://salsa.debian.org/debian/htop",
    "avatar_url": null,
    "star_count": 0,
    "forks_count": 0,
    "last_activity_at": "2018-02-17T18:23:05.550Z"
  }
]

is a bit nicer.
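If all you want is the clone URL, jq can pick it out of that response. The following is only a sketch: salsa_clone_urls is a made-up helper name, and the sample input is a cut-down copy of the response shown above, so the block runs without network access.

```shell
# Hypothetical helper: read a Salsa/GitLab "projects" API response on stdin
# and print "path_with_namespace<TAB>ssh_url_to_repo" for every project.
salsa_clone_urls() {
    jq -r '.[] | [.path_with_namespace, .ssh_url_to_repo] | @tsv'
}

# Offline example using two fields from the htop response above.
printf '%s' '[{"path_with_namespace":"debian/htop","ssh_url_to_repo":"git@salsa.debian.org:debian/htop.git"}]' |
    salsa_clone_urls

# Against the live API it would be used like this:
#   curl --silent 'https://salsa.debian.org/api/v4/projects?search=htop' | salsa_clone_urls
```

A search can return more than one project, which is why the path is printed next to each URL.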

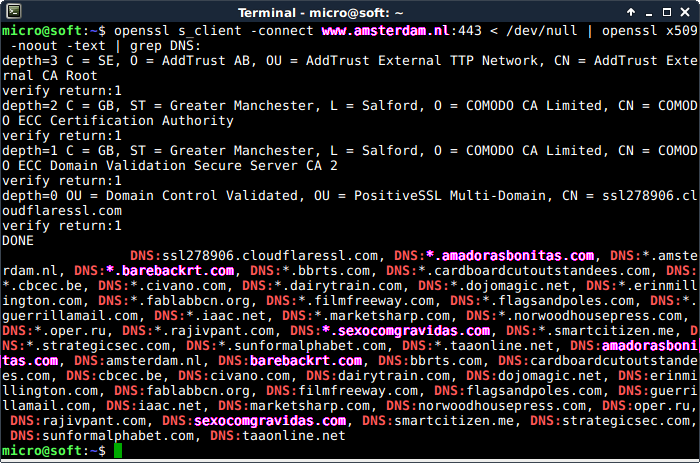

Please note that the git URL format is a bit odd. It's either

git@salsa.debian.org:debian/htop.git or

ssh://git@salsa.debian.org/debian/htop.git.

Notice the ":" -> "/" after the hostname. Bit me once.
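To make the difference between the two forms concrete, here is a small sketch that turns the scp-like form into the ssh:// form. The function name scp_to_ssh_url is made up for this illustration, and the sed expression only covers the simple user@host:path case.

```shell
# Hypothetical helper: convert "user@host:path" into "ssh://user@host/path".
# URLs that already carry a scheme (ssh://, https://) pass through unchanged.
scp_to_ssh_url() {
    printf '%s\n' "$1" | sed -E 's#^([^@/:]+@[^:/]+):#ssh://\1/#'
}

scp_to_ssh_url git@salsa.debian.org:debian/htop.git
# prints ssh://git@salsa.debian.org/debian/htop.git
```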

Finding repositories to update

At this time I found it useful to check which of the repositories I have cloned had not yet been updated in the local .git/config:

find ~/debconf ~/my_sources ~/shared -ipath '*.git/config' -exec grep -H 'url.*git\.debian' '{}' \;

Thanks to Jörg Jaspert (Ganneff) the DebConf repositories have all been moved to Salsa now.

Hint: Bug him for his scripts if you need to do complex moves.

Updating the URLs has been an hour's work on my side, and there is little you can do to speed that up if, as in the DebConf case, teams have used the opportunity to clean up and things are not as easy as using sed -i.
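For the easy cases, where a repository simply moved from collab-maint to the "Debian" group under the same name, the rewrite can be scripted. This is a sketch under exactly that assumption. The helper names alioth_to_salsa and migrate_origin are made up, and the old Alioth URL in the example is illustrative only.

```shell
# Hypothetical helper: map an old collab-maint URL to its assumed Salsa location.
# Assumption: same repository name, now in the "debian" group.
# URLs that do not match the pattern are printed unchanged.
alioth_to_salsa() {
    printf '%s\n' "$1" |
        sed -E 's#^.*/collab-maint/([^/]+)\.git/?$#git@salsa.debian.org:debian/\1.git#'
}

# Hypothetical helper: rewrite the "origin" remote of the repository in directory $1.
migrate_origin() {
    old=$(git -C "$1" remote get-url origin) || return 1
    git -C "$1" remote set-url origin "$(alioth_to_salsa "$old")"
}

alioth_to_salsa ssh://git.debian.org/git/collab-maint/htop.git
# prints git@salsa.debian.org:debian/htop.git
```

Check the result with git remote -v before pulling, as teams that reorganised during the move will not fit this pattern.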

But there is no reason to do this more than once, so for the laptops...

Speeding up migration on multiple devices

rsync -armuvz --existing --include="*/" --include=".git/config" --exclude="*" ~/debconf/ laptop:debconf/

will rsync the .git/config files that you changed to other systems where you keep partial copies.

On these, a simple git pull to get up to the remote HEAD, or the git_pull_all one-liner from https://daniel-lange.com/archives/99-Managing-a-project-consisting-of-multiple-git-repositories.html, will suffice.

Git short URL

Stefano Rivera (tumbleweed) shared this clever trick:

git config --global url."ssh://git@salsa.debian.org/".insteadOf salsa:

This way you can git clone salsa:debian/htop.
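To see what such a short URL expands to without touching your global configuration or the network, git ls-remote --get-url can be combined with a one-off -c setting. This is a sketch, and expand_salsa_url is a made-up wrapper name.

```shell
# Hypothetical wrapper: show how git would rewrite a "salsa:" short URL.
# -c applies the insteadOf rule for this invocation only;
# ls-remote --get-url expands the URL without contacting the remote.
expand_salsa_url() {
    git -c url."ssh://git@salsa.debian.org/".insteadOf=salsa: \
        ls-remote --get-url "$1"
}

expand_salsa_url salsa:debian/htop
```

Once the setting is in your global configuration, plain git ls-remote --get-url salsa:debian/htop should show the same expansion.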
