YouTube has a really nice RSS feature that is extremely well hidden.

If you append a Channel ID to

https://www.youtube.com/feeds/videos.xml?channel_id=<id goes here>

you get a really nice Atom 1.0 (~RSS) feed for your feedreader.

Unfortunately, the Channel ID is hard to find, because the URLs you see while navigating YouTube usually contain a username instead.

For example, https://www.youtube.com/c/TED is TED's channel, full of interesting content that is worth watching (and some assorted horse toppings, of course).

But you have to read a lot of ugly HTML / JSON in that page to find the Channel ID and assemble

https://www.youtube.com/feeds/videos.xml?channel_id=UCAuUUnT6oDeKwE6v1NGQxug

which is the corresponding RSS feed.

Jeff Keeling wrote a simple YouTube RSS Extractor that works well if you have a ../playlist?... or a .../channel/... URL, but it will (currently) fail on user name channels or YouTube landing pages.

So how do we get the Channel ID for a YouTube user we are interested in following?

YouTube has a great API, but it is gated by API keys even for the simplest calls (that requirement only came with v3 of the API, but the previous version has been deprecated since 2015):

dl@laptop:~$ curl 'https://www.googleapis.com/youtube/v3/channels?part=contentDetails&forUsername=DebConfVideos'

{
  "error": {
    "code": 403,
    "message": "The request is missing a valid API key.",
    "errors": [
      {
        "message": "The request is missing a valid API key.",
        "domain": "global",
        "reason": "forbidden"
      }
    ],
    "status": "PERMISSION_DENIED"
  }
}

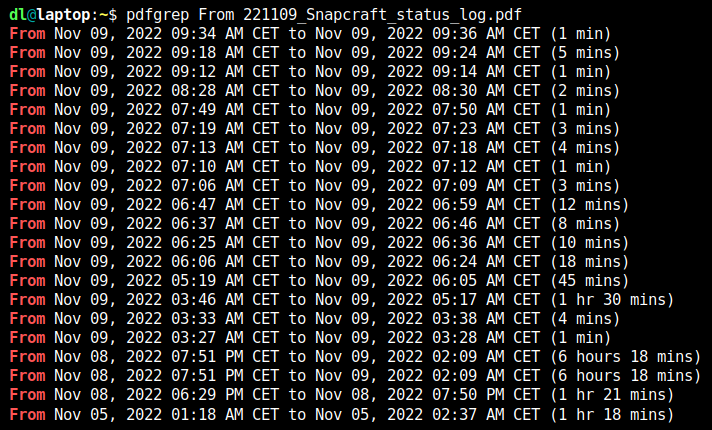

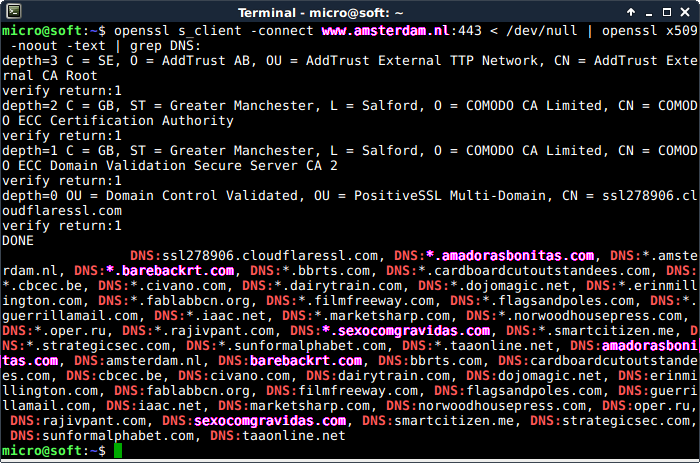

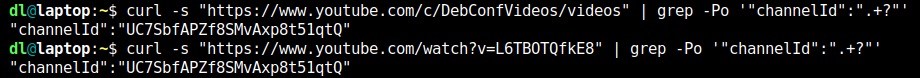

Luckily we can throw the same (example) user name DebConfVideos at curl and grep:

dl@laptop:~$ curl -s "https://www.youtube.com/c/DebConfVideos/videos" | grep -Po '"channelId":".+?"'

"channelId":"UC7SbfAPZf8SMvAxp8t51qtQ"

So https://www.youtube.com/feeds/videos.xml?channel_id=UC7SbfAPZf8SMvAxp8t51qtQ is the RSS feed for DebConfVideos.
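The two steps above (pull the Channel ID out of the page, then build the feed URL) can be wrapped into small shell functions. This is only a sketch: the function names `first_channel_id` and `channel_feed_url` are made up for illustration, and it assumes the page keeps embedding the ID as `"channelId":"..."` as in the output shown above.

```shell
#!/usr/bin/env bash

# Print the first Channel ID found in YouTube page HTML read from stdin.
# Assumption: the page embeds the ID as "channelId":"<id>".
first_channel_id() {
  grep -o '"channelId":"[^"]*"' | head -n 1 | cut -d '"' -f 4
}

# Build the Atom feed URL for the Channel ID given as first argument.
channel_feed_url() {
  printf 'https://www.youtube.com/feeds/videos.xml?channel_id=%s\n' "$1"
}
```

With these defined, something like `channel_feed_url "$(curl -s "https://www.youtube.com/c/DebConfVideos/videos" | first_channel_id)"` should print the feed URL, as long as YouTube serves the page to curl in the same shape.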

We can use individual YouTube video URLs as well. The same curl and grep hack also finds the Channel ID when you point it at a YouTube video URL.

Now, some user pages may have multiple valid RSS feeds because they contain multiple channels.

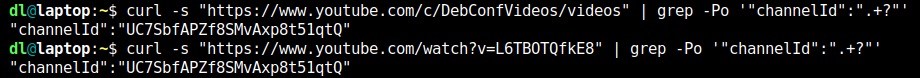

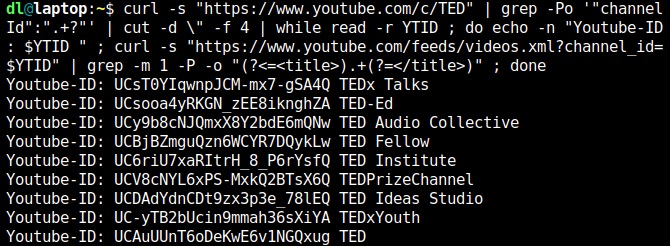

Remember the TED page from above? Well, run:

dl@laptop:~$ curl -s "https://www.youtube.com/c/TED" | grep -Po '"channelId":".+?"' | cut -d \" -f 4 | while read -r YTID ; do echo -n "Youtube-ID: $YTID " ; curl -s "https://www.youtube.com/feeds/videos.xml?channel_id=$YTID" | grep -m 1 -P -o "(?<=<title>).+(?=</title>)" ; done

This will iterate through the Channel IDs found and show you the titles. That way you can assess which one you want to add to your feedreader.

You probably want the last Channel ID in the output, the non-selective "TED" one. That is the one from the example at the top.
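The one-liner is easier to reuse when split into functions. The following is a rough sketch of the same idea, not a drop-in replacement: the function names are hypothetical, and unlike the one-liner it drops repeated Channel IDs (an addition of this sketch), since the same ID can appear many times in one page.

```shell
#!/usr/bin/env bash

# Print each distinct Channel ID from page HTML on stdin,
# in order of first appearance.
unique_channel_ids() {
  grep -o '"channelId":"[^"]*"' | cut -d '"' -f 4 | awk '!seen[$0]++'
}

# Print the text of the first <title> element of Atom XML on stdin.
# Assumption: the first <title> in the feed is the channel title.
feed_title() {
  grep -o '<title>[^<]*</title>' | head -n 1 | sed 's/<[^>]*>//g'
}

# For the page URL in $1, list every Channel ID with its feed title.
list_channel_feeds() {
  curl -s "$1" | unique_channel_ids | while read -r id; do
    title=$(curl -s "https://www.youtube.com/feeds/videos.xml?channel_id=$id" | feed_title)
    printf 'Youtube-ID: %s %s\n' "$id" "$title"
  done
}
```

Calling `list_channel_feeds "https://www.youtube.com/c/TED"` would then do the network requests; the two helper functions only filter text and can be tried on saved files.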

Update

02.06.2022:

smpl wrote in and has the much better solution for the most frequent use cases:

You can also get a feed directly with a username:

https://www.youtube.com/feeds/videos.xml?user=<username>

The one I use most is the one for playlists (if creators remember to use them).

https://www.youtube.com/feeds/videos.xml?playlist_id=<playlist id>

For the common case you don't even need the channel ID that way.

But it is also conveniently given in a <yt:channelId> tag (or the topmost <id> tag) within the Atom XML document.
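To tie the three feed URL variants together, here is a small sketch with hypothetical helper names (`feed_url`, `channel_id_from_feed`). It assumes the value needs no URL encoding and that the feed contains a `<yt:channelId>` tag as described above.

```shell
#!/usr/bin/env bash

# Build a feed URL. $1 is the query parameter (channel_id, user or
# playlist_id), $2 is its value, used verbatim (no URL encoding).
feed_url() {
  case "$1" in
    channel_id|user|playlist_id)
      printf 'https://www.youtube.com/feeds/videos.xml?%s=%s\n' "$1" "$2" ;;
    *)
      echo "unknown feed kind: $1" >&2
      return 1 ;;
  esac
}

# Print the content of the first <yt:channelId> tag of Atom XML on stdin.
channel_id_from_feed() {
  grep -o '<yt:channelId>[^<]*</yt:channelId>' | head -n 1 | sed 's/<[^>]*>//g'
}
```

For instance, `curl -s "$(feed_url user DebConfVideos)" | channel_id_from_feed` should print the Channel ID, provided the username feed exists for that user.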

Thanks, smpl!