Now it is a commonplace that security is hard. It involves advanced mathematics and a single, tiny mistake or omission in implementation can spoil everything.

And the only sane IT security can be open source security. Because you need to assess the algorithms and their implementation and you need to be able to completely verify the implementation. You simply can't if you don't have the code and can compile it yourself to produce a trusted (ideally reproducible) build. A no-brainer for everybody in the field.

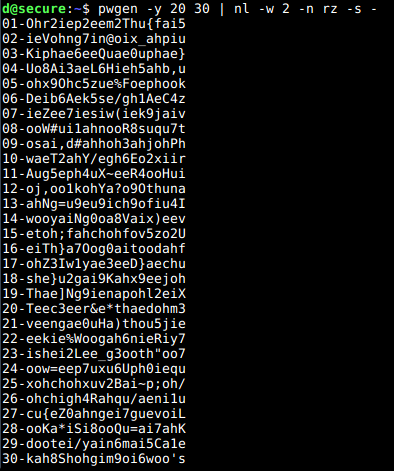

But we make it unbelievably hard for people to use security tools. Because these have grown over decades fostered by highly intelligent people with no interest in UX.

"It was hard to write, so it should be hard to use as well."

And then complain about adoption.

PGP / gpg has received quite some fire this year and the good news is this has resulted in funding for the sole gpg developer. Which will obviously not solve the UX problem.

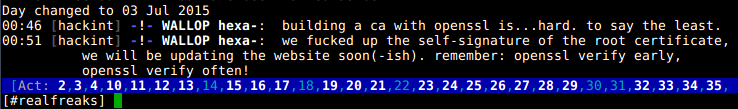

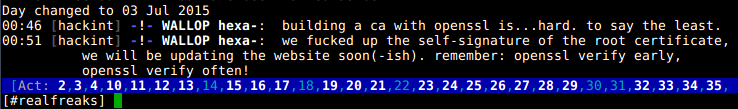

But the much worse offender is OpenSSL. It is so hard to use that even experienced hackers fail.

Now, securely encrypting a mass communication media like IRC is not possible at all.

Read Trust is not transitive: or why IRC over SSL is pointless1.

Still it makes wiretapping harder and that may be a good thing these days.

LibreSSL has forked the OpenSSL code base "with goals of modernizing the codebase, improving security, and applying best practice development processes". No UX improvement. A cleaner code for the chosen few. Duh.

I predict the re-implementations and gradual improvement scenarios will fail. The nearly-impossible-to-use-right situation with both gpg and (much more importantly) OpenSSL cannot be fixed by gradual improvements and however thorough code reviews.

Now the "there's an App for this" security movement won't work out on a grand scale either:

- Most often not open source. Notable exceptions: ChatSecure, TextSecure.

- No reference implementations with excellent test servers and well documented test suites but products. "Use my App.", "No, use MY App!!!".

- Only secures chat or email. So the VC-powered ("next WhatsApp") mass-adoption markets but not the really interesting things to improve upon (CA, code signing, FDE, ...).

- While everybody is focusing on mobile adoption the heavy lifting is still on servers. We need sane libraries and APIs. No App for that.

So we need a new development, a new code, a new open source product. Sadly so the Core Infrastructure Initiative so far only funds existing open source projects in dire needs and people bug hunting.

It basically makes the bad solutions of today a bit more secure and ensures maintenance of decade old crufty code bases. That way it extends the suffering of everybody using the inadequate solutions of today.

That's inevitable until we have a better stack but we need to look into getting rid of gpg and OpenSSL and replacing it with something new. Something designed well from the ground up, technically and from a user experience perspective.

Now who's in for a five year funding plan? $3m2 annually. ROCE 0. But a very good chance to get the OBE awarded.

Updates:

10.06.22:

Carl Tashian made a GUI mockup to show the complexity of the OpenSSL "user interface".

21.07.19:

A current essay on "The PGP problem" is making rounds and lists some valid issues with the file format, RFCs and the gpg implementation. The GnuPG-users mailing list has a discussion thread on the issues listed in the essay.

19.01.19:

Daniel Kahn Gillmor, a Senior Staff Technologist at the ACLU, tried to get his gpg key transition correct. He put a huge amount of thought and preparation into the transition. To support Autocrypt (another try to get GPG usable for more people than a small technical elite), he specifically created different identities for him as a person and his two main email addresses. Two days later he has to invalidate his new gpg key and back-off to less "modern" identity layouts because many of the brittle pieces of infrastructure around gpg from emacs to gpg signature management frontends to mailing list managers fell over dead.

28.11.18:

Changed the Quakenet link on why encrypting IRC is useless to an archive.org one as they have removed the original content.

13.03.17:

Chris Wellons writes about why GPG is a failure and created a small portable application Enchive to replace it for asymmetric encryption.

24.02.17:

Stefan Marsiske has written a blog article: On PGP. He argues about adversary models and when gpg is "probably" 3 still good enough to use. To me a security tool can never be a sane choice if the UI is so convoluted that only a chosen few stand at least a chance of using it correctly. Doesn't matter who or what your adversary is.

Stefan concludes his blog article:

PGP for encryption as in RFC 4880 should be retired, some sunk-cost-biases to be coped with, but we all should rejoice that the last 3-4 years had so much innovation in this field, that RFC 4880 is being rewritten[Citation needed] with many of the above in mind and that hopefully there'll be more and better tools. [..]

He gives an extensive list of tools he considers worth watching in his article. Go and check whether something in there looks like a possible replacement for gpg to you. Stefan also gave a talk on the OpenPGP conference 2016 with similar content, slides.

14.02.17:

James Stanley has written up a nice account of his two hour venture to get encrypted email set up. The process is speckled with bugs and inconsistent nomenclature capable of confusing even a technically inclined person. There has been no progress in the last ~two years since I wrote this piece. We're all still riding dead horses. James summarizes:

Encrypted email is nothing new (PGP was initially released in 1991 - 26 years ago!), but it still has a huge barrier to entry for anyone who isn't already familiar with how to use it.

04.09.16:

Greg Kroah-Hartman ends an analysis of the Evil32 PGP keyid collisions with:

gpg really is horrible to use and almost impossible to use correctly.

14.11.15:

Scott Ruoti, Jeff Andersen, Daniel Zappala and Kent Seamons of BYU, Utah, have analysed the usability [local mirror, 173kB] of Mailvelope, a webmail PGP/GPG add-on based on a Javascript PGP implementation. They describe the results as "disheartening":

In our study of 20 participants, grouped into 10 pairs of participants who attempted to exchange encrypted

email, only one pair was able to successfully complete the assigned tasks using Mailvelope. All other participants were

unable to complete the assigned task in the one hour allotted to the study. Even though a decade has passed since the last

formal study of PGP, our results show that Johnny has still not gotten any closer to encrypt his email using PGP.